Kevin Cuzner's Personal Blog

Electronics, Embedded Systems, and Software are my breakfast, lunch, and dinner.

Cloning Simulink...in Python

For a while now I have been working on a bench supply. As part of this I have been trying to get a PID controller to work. At first it was simple, but after I asked my Dad about it (he does power electronics), he suggested that I use a cascaded PID loop to control both the voltage and the current using only the voltage. I have a bench model of sorts going, but I don't really want to start construction until I have everything finalized, since blowing things up and making mistakes is expensive on my meager college student budget. Tuning the controller without a working bench model that I am willing to blow up is hard, so I started trying to figure out how to simulate it. Being partial to Simulink (I've used it before with some nice pre-built blocks), I wanted to be able to lay the system out graphically, the way control system diagrams usually look, and I also wanted to be able to view plots over time. Thus was born my latest project: SimuPy.

Python seemed like an ideal language for this since I wanted it to look nice, be extensible, and be almost universally cross-platform. I am relying heavily on the Qt library because it runs on almost anything and lets me use slots and signals on pretty much any object. I guess another option would have been Java, but since I don't like Java that much, I went with Python. In addition, I am weighing a couple of other options:

- At the sacrifice of portability, I could write the interface half in C++ and half in Python so that I keep the extensibility without requiring Python to be installed on the user's computer. The simulation part would then become a Python library that the program loads.

- Write the entire thing in C++ for speed and true multithreading and use Python solely for extensions. This would be a little more difficult since I kind of leverage the dynamic typing thing that Python has going on.

So far, my structure has everything based on a Model, which contains Blocks. There is also a simulation Context, which holds information about each Block and about where the simulation is in terms of the current step (simulations are stepped over time in increments of dt). Contexts are also where a Block stores all the information it needs to retain during the current step and into the next one.

A Block is an operation over time in the flow of the simulation: it could be a simple addition, a derivative or integral, or a full PID controller. Blocks declare themselves to have a number of Input objects and Output objects. Inputs and Outputs are named and have a slot (function) called set which sets their value. Outputs have a signal called 'ready' to which inputs connect their 'set' slot. When an output's 'set' method is called, it emits this signal. When an input is set and it sees that all inputs attached to its block are set, it performs a "step" on the block.

In addition, there are 3 special blocks: EntryBlock, ExitBlock, and ModelBlock. Entry and Exit blocks are used in models, since a model can have "Entry" and "Exit" points. These points can be used to loop a value from an Exit to an Entry (if they have the same name), or they can act as Input and Output objects if the Model is placed inside a ModelBlock. A ModelBlock is a block containing a model, which it executes in a simulation Context that is a child of its own context. In this way, blocks can be nested. If one creates a Model with 2 Entries and 2 Exits, where one Entry and one Exit share the same name, and then attaches the Model to a ModelBlock, the ModelBlock will have 1 input and 1 output corresponding to the free Entry and Exit on the Model.

Models can't be recursive, but they can be nested: as long as a block never contains itself at some child level, models can be reused, which gives a simulation some sense of modularity.
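To make the step-triggering rule concrete, here is a minimal sketch translated into JavaScript, with a plain listener array standing in for Qt's signal/slot connections. The class names (Output, Input, Block, SumBlock, GainBlock, ProbeBlock) and methods such as connect and inputChanged are hypothetical; this is not SimuPy's actual code, and it leaves out Contexts, dt, and ModelBlocks.

```javascript
// Sketch of the Input/Output/Block flow described above, translated to JavaScript.
// All class and method names are hypothetical stand-ins for the Python/Qt version.

class Output {
  constructor(name) {
    this.name = name;
    this.listeners = []; // plays the role of the connections to the Qt 'ready' signal
  }
  connect(input) {
    this.listeners.push(input);
  }
  set(value) {
    this.value = value;
    // "emit ready": pass the value to every connected input's set slot
    for (const input of this.listeners) input.set(value);
  }
}

class Input {
  constructor(name, block) {
    this.name = name;
    this.block = block;
    this.isSet = false;
  }
  set(value) {
    this.value = value;
    this.isSet = true;
    this.block.inputChanged();
  }
}

class Block {
  constructor(inputNames, outputNames) {
    this.inputs = {};
    this.outputs = {};
    for (const n of inputNames) this.inputs[n] = new Input(n, this);
    for (const n of outputNames) this.outputs[n] = new Output(n);
  }
  inputChanged() {
    const all = Object.values(this.inputs);
    if (all.every((input) => input.isSet)) {
      all.forEach((input) => { input.isSet = false; }); // arm for the next time step
      this.step();
    }
  }
  step() {
    throw new Error("subclasses must implement step()");
  }
}

class SumBlock extends Block {
  constructor() { super(["a", "b"], ["out"]); }
  step() { this.outputs.out.set(this.inputs.a.value + this.inputs.b.value); }
}

class GainBlock extends Block {
  constructor(k) { super(["x"], ["out"]); this.k = k; }
  step() { this.outputs.out.set(this.k * this.inputs.x.value); }
}

class ProbeBlock extends Block {
  constructor() { super(["x"], []); this.samples = []; }
  step() { this.samples.push(this.inputs.x.value); }
}

// Wire sum -> gain -> probe.
const sum = new SumBlock();
const gain = new GainBlock(2);
const probe = new ProbeBlock();
sum.outputs.out.connect(gain.inputs.x);
gain.outputs.out.connect(probe.inputs.x);

sum.inputs.a.set(1); // only one input set: nothing steps yet
sum.inputs.b.set(3); // both set: sum steps, which cascades through gain to probe
// probe.samples is now [8]
```

Because a block fires only once every input has been set, this simple version only handles loop-free diagrams; closing a feedback loop needs something like the Exit-to-Entry mechanism described above, where a value produced in one step is fed back in at the start of the next.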

Blocks are subclassed into a package called model. The __init__.py file in the model package defines the basic form of a block, and the individual modules in the package define more specific blocks. The blocks' constructors are then cached by reflection so that a block can be constructed just by naming it. To extend the blocks available in a simulation, all that must be done is to drop the new module's Python file into the model folder. I am considering changing this a bit to separate user-added modules from the "system" modules, in the same fashion as I did with the WebSocketServer, where the files in a folder were loaded into the context of another package.
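Python can discover the block classes by reflecting over the modules in the model package. JavaScript has no direct equivalent for scanning a folder of files, so this hypothetical sketch has each module register its constructor explicitly in a name-to-constructor map. registerBlockType, createBlock, and the Integrator block are illustrative names, not part of SimuPy.

```javascript
// Hypothetical name -> constructor registry, standing in for the reflection-based cache.
const blockTypes = new Map();

function registerBlockType(name, ctor) {
  if (blockTypes.has(name)) throw new Error(`Block type already registered: ${name}`);
  blockTypes.set(name, ctor);
}

function createBlock(name, ...args) {
  const ctor = blockTypes.get(name);
  if (!ctor) throw new Error(`Unknown block type: ${name}`);
  return new ctor(...args);
}

// A module dropped into the model folder would register its blocks like this.
class Integrator {
  constructor(dt) {
    this.dt = dt;
    this.total = 0;
  }
  step(x) {
    this.total += x * this.dt; // forward-Euler accumulation over one time step
    return this.total;
  }
}
registerBlockType("Integrator", Integrator);

// Construct a block just by naming it.
const integ = createBlock("Integrator", 0.5);
integ.step(2); // total is 1
integ.step(2); // total is 2
```

The trade-off is one extra registration line per block in exchange for not depending on file-system scanning; the lookup by name works the same way either way.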

Simulations are to be stored in an XML format which is going to be more or less human readable and should preserve the look and feel of the simulation. I am still working on the exact format at the moment, but that is the next step.

As for the GUI, I plan on using Qt since it seems the most cross-platform (sorry GTK...Windows needs too much help to load you, and PyDev in Eclipse doesn't like the whole introspection thing). I plan on releasing the project under the Apache License (but don't quote me on that or hold me to it yet...I may choose a different license later once I get more of a feel for how the project would be used). Either way, I plan on publishing the source code on GitHub, since nothing like this really seems to exist in a simple form. Sure, there are Simulink clones that work with Octave and things like that, but there appear to be few, if any, stand-alone applications that do this (except perhaps a paid program called logic.ly, though this should be able to duplicate that program's functionality as well). I guess it is kind of a niche market, since the only people who do this kind of thing can usually afford Simulink and Matlab.

For the record, I do have access to Simulink and Matlab through the University I am attending, but where would the fun be in that?

KnockoutJS and Memory Usage

Recently at work I have been using KnockoutJS for structuring my Javascript. To be honest, it is probably the best thing since jQuery, in my opinion, in terms of cutting down the quantity of code that one must write for an interface. The only problem is that it is really, really easy to make a page use a ridiculous amount of memory. After a lot of thinking and trying different things, I have found a better way to structure more complex pages.

The KnockoutJS documentation is really great, but it is geared towards the simple stuff and basics so that you can get started quickly. It doesn't talk much about more complex scenarios, which leads to comments claiming that it isn't very good for complex user interfaces. When things get more complex, like interfacing it with existing applications built on different frameworks or handling very large quantities of data, it doesn't say much and leaves one to figure things out on one's own. One particular project I was working on could display several thousand items in a graph/tree format while calculating multiple inheritance and parentage on several values stored in each item object. In Chrome I watched this page easily use 800 MB. Firefox was about the same. Internet Explorer got to 1.5 GB before I shut it off. Why was it using so much memory? Here is an example that wouldn't use a ton of memory, but that illustrates the mistake I made:

Example

Javascript (note that this assumes usage of jQuery for things like AJAX):

function ItemModel(id, name) {
    var self = this;
    this.id = id;
    this.name = ko.observable(name);
    this.editing = ko.observable(false);
    this.save = function () {
        // logic that creates a new item if the id is null or just saves the item otherwise
        // through a call to $.ajax
    };
}

function ItemContainerModel(id, name, items) {
    var self = this;
    this.id = id;
    this.name = ko.observable(name);
    this.editing = ko.observable(false); // must exist: ViewModel.add calls editing(true) on new containers
    this.items = ko.observableArray(items);
    this.save = function () {
        // logic that creates a new item container if the id is null or just saves the item container otherwise
        // through a call to $.ajax
    };
    this.add = function () {
        var aNewItem = new ItemModel(null, null);
        aNewItem.editing(true);
        self.items.push(aNewItem);
    };
    this.remove = function (item) {
        // $.ajax call to the server to remove the item
        self.items.remove(item);
    };
}

function ViewModel() {
    var self = this;
    this.containers = ko.observableArray();
    var blankContainer = new ItemContainerModel(null, null, []);
    this.selected = ko.observable(blankContainer);
    this.add = function () {
        var aNewContainer = new ItemContainerModel(null, null, []);
        aNewContainer.editing(true);
        self.containers.push(aNewContainer);
    };
    this.remove = function (container) {
        // $.ajax call to the server to remove the container
        self.containers.remove(container);
    };
    this.select = function (container) {
        self.selected(container);
    };
}

$(document).ready(function () {
    var vm = new ViewModel();
    ko.applyBindings(vm);
});

Now for a really simple view (sorry for lack of styling or the edit capability, but hopefully the point will be clear):

<a data-bind="click: add" href="#">Add container</a>
<ul data-bind="foreach: containers">
    <li><span data-bind="text: name"></span> <a data-bind="click: save" href="#">Save</a> <a data-bind="click: $parent.remove" href="#">Remove</a></li>
</ul>
<div data-bind="with: selected">
    <a data-bind="click: add" href="#">Add item</a>
    <div data-bind="foreach: items">
        <div data-bind="text: name"></div>
        <a data-bind="click: save" href="#">Save</a>
        <a data-bind="click: $parent.remove" href="#">Remove</a>
    </div>
</div>

The Problem

So, what is the problem with this model? It works just fine... you can add, remove, save, and display items in a collection of containers. However, if this view were to contain, say, 1000 containers with 1000 items each, what would happen? Well, we would have a lot of memory usage. Now, you could say that would happen no matter what you did, and you wouldn't be wrong. The question is, how much memory is it going to use? The example above is nowhere near the most efficient way of structuring a model and will consume much more memory than necessary. Here is why:

Note how the saving, adding, and removing functions are implemented: they are attached to the this variable inside each object. In languages like C++, C#, or Java, adding methods to a class (which is roughly what attaching a function to the this variable does in Javascript, if you aren't as familiar with objects in Javascript) generally doesn't increase the memory used by each object; it only makes the program larger, since every instance shares the same compiled code. Javascript is different.

Javascript uses what are called closures. A closure is a very powerful tool that allows for intuitive access to, and scoping of, the variables seen by functions. I won't go into great detail on the awesome things you can do with them since many others have explained it better than I ever could. Another thing Javascript does is treat functions as "first-class citizens," which essentially means that a function is a value like any other: it can be stored in a variable, passed as an argument, or returned from another function. This allows you to assign a function to a variable (var variable = function () { alert("hi"); };) so that you can call variable() and it executes the function as if "variable" were the name of the function.

Now, tying all that together, here is what happens: closures "wrap up" everything in the scope of a function when it is declared, so that the function has access to all the variables that could be seen at that point. Since functions are values, assigning a function to a property of the this object extends the object to hold that function. Declaring functions inline while in the scope of the object, as we see with the add, remove, and save functions, makes each of them specific to that particular instance of the object.

Allow me to explain a bit: every time you call 'new ItemModel(...)', in addition to creating a new item model, it creates a new function: this.save. Every single ItemModel created has its very own instance of this.save; they don't share the same function. Likewise, every new ItemContainerModel gets 3 new functions specific to that instance. That means that if we were to create two containers with 3 items each, we would get 12 functions (6 for the items, 6 for the containers).

In some cases this is very useful, since it lets you create custom methods for each object. Taking the item save function as an example, instead of accessing the 'id' variable as stored in the object, it could use one of the parameters of 'function ItemModel(...)' directly. This works because the closure wrapped up the variables passed into the ItemModel function, since they were in scope for the this.save function. By doing this, you could have the this.save function modify something different for each instance of ItemModel. In our situation, however, this is more of an issue than a benefit: we just created 8 redundant functions that do exactly the same thing as the 4 we actually need. Each of those functions consumes memory, and after a thousand of these objects are made, that usage gets to be quite large.
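To make the difference concrete, here is a minimal plain-JavaScript sketch (no Knockout, so the observables are left out) comparing a per-instance this.save with a single shared handler that receives the model as an argument, the way the click binding passes it. The trimmed-down ItemModel and the returned strings (standing in for the $.ajax calls) are illustrative only.

```javascript
// Per-instance method: every `new ItemModel(...)` allocates a fresh function object.
function ItemModel(id, name) {
  this.id = id;
  this.name = name;
  this.save = function () {
    return "saving " + this.id; // stands in for the $.ajax call
  };
}

// Shared handler: one function object for all items; the model to act on
// arrives as the argument, just as Knockout's click binding passes it in.
function saveItem(item) {
  return "saving " + item.id;
}

const items = [];
for (let i = 0; i < 6; i++) items.push(new ItemModel(i, "item " + i));

// Six items -> six distinct save functions, versus one saveItem for all of them.
const distinctSaves = new Set(items.map((item) => item.save)).size; // 6
```

Both versions behave the same when called; the only difference is how many function objects exist, which is exactly what grows with the number of models.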

Solution

How can this be fixed? What we need to do is to reduce the number of anonymous functions that are created. We need to remove the save, add, and remove functions from the ItemModel and ItemContainerModel. As it turns out, the structure of Knockout is geared towards doing something which can save us a lot of memory usage.

When an event binding like 'click' fires, Knockout passes the model that the binding was representing (the current $data) as the first argument to the handler. This lets us know who called the method. We already see this in the example's remove functions: their first argument is the model that the particular click was bound to. We can use this to fix our problem.

First, we must remove all functions from the models that will be duplicated often. This means that the add, remove, and save functions in ItemContainerModel and the save function in ItemModel have to go. Next, we add back references so that each object nested below the ViewModel's direct children knows who its daddy is. Here is an example:

function ItemModel(id, name, container) {
    // note the addition of the container argument

    // ...keep the same variables as before, but remove the this.save stuff

    this.container = container; // add this as our back reference
}

function ItemContainerModel(id, name) {
    // NOTE 1: this didn't need an argument for a back reference. This is because it is a direct child of the root model and
    // since the root model contains the functions dealing with adding and removing containers, it already knows the array to
    // manipulate

    // NOTE 2: the items argument has been removed. This is so that the container can be created before the items and the back
    // reference above can be completed. So, the process for creating a container with items is now: create container, create
    // items with a reference to the container, and then add the items to the container by doing container.items(arrayOfItems);

    // keep id, name, and editing as before, but start items out empty:
    this.items = ko.observableArray();

    // remove all the functions from this model as well
}

function ViewModel() {
    // all the stuff we already had here from the example above stays

    // we add the following:
    this.saveItem = function (item) {
        // instead of using self.id and self.name() when creating our ajax request, we use item.id and item.name()
    };
    this.saveContainer = function (container) {
        // instead of using self.id and self.name() when creating our ajax request, we use container.id and container.name()
    };
    this.addItem = function (container) {
        var aNewItem = new ItemModel(null, null, container);
        aNewItem.editing(true);
        container.items.push(aNewItem);
    };
    this.removeItem = function (item) {
        // create a $.ajax request to remove the item based on its id
        item.container.items.remove(item); // using our back reference, we can remove the item from its parent container
    };
}

The view will now look like so (note that the bindings to functions now reference $root: the main ViewModel):

<a data-bind="click: add" href="#">Add container</a>
<ul data-bind="foreach: containers">
    <li><span data-bind="text: name"></span> <a data-bind="click: $root.saveContainer" href="#">Save</a> <a data-bind="click: $root.remove" href="#">Remove</a></li>
</ul>
<div data-bind="with: selected">
    <a data-bind="click: $root.addItem" href="#">Add item</a>
    <div data-bind="foreach: items">
        <div data-bind="text: name"></div>
        <a data-bind="click: $root.saveItem" href="#">Save</a>
        <a data-bind="click: $root.removeItem" href="#">Remove</a>
    </div>
</div>

Now, that wasn't so hard, was it? Each model now only stores its data instead of also carrying its own set of function closures. By moving the individual model functions down to the ViewModel, we kept the same functionality as before, did not increase our code size, and significantly reduced memory usage once the model gets really big. If we create 2 containers with 3 items each, no functions are created beyond the 4 we added to the ViewModel. The only memory consumed by each model is the space needed to store the values it represents (id, name, etc., along with the observables that wrap them).

Summary

In summary, to reduce KnockoutJS memory usage consider the following:

- Reduce the number of functions inside the scope of each model. Move functions to the lowest possible place in your model tree (ideally the root ViewModel) to avoid unnecessary duplication.

- Avoid closures inside heavily duplicated models like the plague. I didn't cover this above, but be careful with computed observables and their functions. It may be better to declare the bulk of a computed observable's function outside the model and then use it like so: 'this.aComputedObservable = ko.computed(function () { return aFunctionThatYouCreated(self); });' where self was declared earlier as this in the scope of the model itself. That way the computed observable still has access to the contents of the model, while the closure created for each model stays small.

- Be very very slim when creating your model classes. Only put data there that will be needed.

- Consider pagination or something. If you don't need 1000 objects displayed at the same time, don't display 1000 objects at the same time. There is a server there to store the information for a reason.

The first week or two with Arch Linux

After some frustrating times involving Ubuntu 12.04, hibernation, suspending, and random freezing, I decided I needed to try something different. Being a Sandy Bridge desktop, my computer naturally seems to have slight problems with Linux support in general. Don't get me wrong, I really like my computer and my processor...however, the hardware drivers frustrate me at times. So, at my wits' end, I decided to do something crazy and take the plunge into a bleeding-edge rolling release Linux distribution: Arch Linux.

Arch Linux is interesting for me since it's the first time I have not used an operating system with the "version" paradigm. Since it's a rolling release it is prone to more problems, but it also has the advantage of always being up to date. Since my computer's hardware is relatively new (it has been superseded by Ivy Bridge, but even so its driver support still seems to be maturing), I felt that I had more to gain from a rolling release, where new updates come out almost immediately (think kernel 3.0 to 3.2...Sandy Bridge processors suddenly got much better power management). So, without further ado, here are my plusses and minuses (note that this will end up comparing Ubuntu to Arch a lot since that's all I know at the moment):

Plusses:

- It was actually very easy to install. Since I have had problems with net installs, I did a core install and then updated it. I practiced several times beforehand on virtual machines, including installing onto existing partitions. Although the initial downloads took some time (the lack of curl in the core install was kind of upsetting since I couldn't use rankmirrors to get better speeds), after that it was pretty fast. Thanks to considerable documentation and a few practice runs, getting an X environment set up using Gnome 3 (and gdm...I like graphical logins) didn't take long at all. It took a bit of coaxing (read: Google + the Arch wiki) to get things such as NetworkManager running, but with time I had it all figured out and I managed to get the whole system running more or less stably within a day.

- It boots faster than Ubuntu and is more explicit about what exactly it's doing. I liked the Ubuntu moving logo thing, but I actually enjoy seeing what the computer is doing when it boots. For purely aesthetic reasons, it gives the computer more of a "raw" feel, which, for some strange masochistic reason, I enjoy. The slowest part is initializing the networks, and if that didn't have to happen the entire system could boot in under 60 seconds after the BIOS gets done showing off its screen.

- The documentation is awesome. Clearly, people have spent lots and lots and lots of time writing the documentation in the Arch wiki. It certainly made setting up easier, since many of the random corner cases were in the troubleshooting sections of several articles, and I ended up running into a couple of them. One thing that was easier to set up than in Ubuntu was suspending and hibernating (at least getting them to work reliably). With some help from the forum (see next point) and a few pages of documentation on pm-utils, I got suspend, resume, and hibernate (!!!) running. I haven't even gotten hibernate to work in Windows.

- The community is great. I have rarely been able to get a question answered on the Ubuntu forums since they are so congested. I asked a question on the Arch BBS, and in less than a day I had a response and was able to do some trial-and-error troubleshooting of the suspend and hibernate functionality of my computer.

Minuses:

- The rolling release model breaks things occasionally. Recently, the linux-firmware package was updated and this caused my wireless card to stop working since it could no longer find the drivers. I'm not sure why yet, so I have just downgraded the package and blacklisted it from upgrades. Hopefully that doesn't kill me later (it probably will), but if it does, by then I hope to have figured out what is wrong.

- With great power comes great responsibility. The sheer flexibility is great since I don't have a bunch of extra packages I don't need, but when I was practicing with the VMs, I managed to get myself stuck in a hole where the only solution was to re-format the drive. However, ever since a mishap with Ubuntu (the theming engine changed all my text to black on black or white on white), I have kept my home folder separate from the system so that I can easily re-format and re-install the system without losing all my stuff (all 132 GB of it).

- This isn't a problem for most people, but Arch accesses the hard drive less often than other distros. Why is that a con for me? Well, I have a Western Digital Green hard drive with an automated parking feature that parks the heads after 10 seconds of inactivity. In Windows this doesn't matter, but in Linux the filesystem is touched every 11-15 seconds or so, just past that 10-second timeout, which results in a LOT of head parkings. Considering that the heads are only rated for 300K cycles and people have reported reaching that in less than a year, it is a real issue. I have a program (wdantiparkd) which writes to the hard drive every 7 seconds while watching whether anything else has written to it, so that the heads park only after 10 minutes of real inactivity rather than 10 seconds. It helps, but it worked better on Ubuntu.
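To put "less than a year" in perspective, here is the back-of-the-envelope arithmetic as a small JavaScript function. It assumes every filesystem access arrives just after the 10-second idle timer has parked the heads, so each access costs exactly one load/unload cycle, and it uses 12 s as a representative interval from the 11-15 s range. The function name and the 8-hours-a-day figure are illustrative assumptions.

```javascript
// Days until a head-parking rating is used up, assuming one park/unpark
// cycle per filesystem access (each access lands just after the idle timeout).
function daysToCycleLimit(ratedCycles, secondsBetweenAccesses, hoursOnPerDay) {
  const cyclesPerHour = 3600 / secondsBetweenAccesses;
  const hoursToLimit = ratedCycles / cyclesPerHour;
  return hoursToLimit / hoursOnPerDay;
}

// 300K rated cycles, one access roughly every 12 seconds:
const alwaysOn = daysToCycleLimit(300000, 12, 24);  // about 41.7 days (1000 hours)
const eightHours = daysToCycleLimit(300000, 12, 8); // 125 days
```

Even a machine that is only on 8 hours a day would reach the rating in roughly four months under these assumptions, which is why stretching the idle window to 10 minutes matters so much.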

Overall, this experience with Arch has allowed me to become much more familiar with Linux and its guts, and slowly but surely I am getting better at fixing issues. If you are considering a switch from your present operating system and already have experience with Linux (especially the command line, since that's what you are stuck with starting out before you install Xfce, Gnome, KDE, etc.), I would recommend this distribution. Of course, if you get easily frustrated with problems and don't enjoy solving them, perhaps a little more stability would be something to look for instead.

Here is my desktop as it stands:

A Working Demo of the WebSocketServer

I have finally given in and purchased some hosting at Rackspace so I could put the WebSocketServer somewhere. The demo is live and working, so just visit the following URL to try a very simple chatroom:

http://cuznersoft.com/python-websocket-server/html/demo_chatroom.html

Multiprocessing with the WebSocketServer

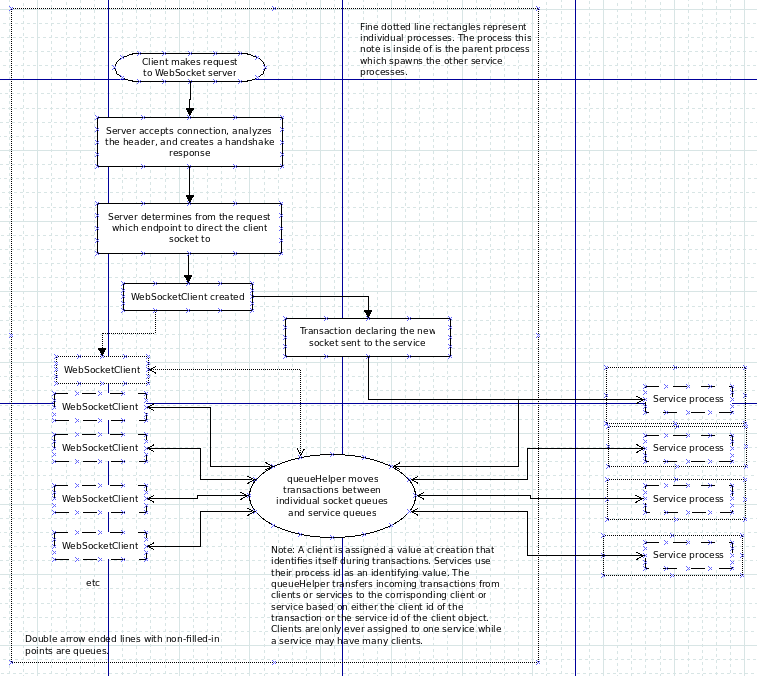

After spending way too much time thinking about exactly how to do it, I have got multiprocessing working with the WebSocketServer.

I have learned some interesting things about multiprocessing with Python:

- Basically no complicated objects can be sent over queues.

- Since pickle is used to send the objects, the objects must also be picklable (serializable).

- Methods (i.e. callbacks) cannot be sent either

- Processes can't have new queues added after the initial creation since queues aren't picklable, so when a process is created, it has to be given its queues, and those are all the queues it's ever going to get

So the new model for operation is as follows:

Sadly, because of the lack of ability to share methods between processes, there is a lot of polling going on here. A lot. The service has to poll its queues to see if any clients or packets have come in, the server has to poll all the client socket queues to see if anything came in from them and all the service queues to see if the services want to send anything to the clients, etc. I guess it was a sacrifice that had to be made to be able to use multiprocessing. The advantage gained now is that services have access to their own interpreter and so they aren't all forced to share the same CPU. I would imagine this would improve performance with more intensive servers, but the bottleneck will still be with the actual sending and receiving to the clients since every client on the server still has to share the same thread.
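The polling described above can be sketched as a single pass that the server repeats. This is a JavaScript illustration, not the repository's Python code: plain arrays stand in for the multiprocessing queues (shift() playing the part of a non-blocking get), and pollOnce, the inbox/outbox fields, and the { clientId, data } message shape are all hypothetical.

```javascript
// One pass of a hypothetical polling loop. Arrays stand in for the
// multiprocessing queues; shift() plays the role of a non-blocking get.
function pollOnce(clients, services) {
  let handled = 0;

  // 1. Forward anything that arrived from each client to that client's service.
  for (const client of clients) {
    while (client.inbox.length > 0) {
      const data = client.inbox.shift();
      services[client.service].inbox.push({ clientId: client.id, data });
      handled++;
    }
  }

  // 2. Deliver anything each service wants to send to the addressed client.
  const clientsById = new Map(clients.map((c) => [c.id, c]));
  for (const service of Object.values(services)) {
    while (service.outbox.length > 0) {
      const msg = service.outbox.shift();
      const target = clientsById.get(msg.clientId);
      if (target) target.outbox.push(msg.data); // drop messages for clients that have left
      handled++;
    }
  }
  return handled;
}

// The server would call this over and over (sleeping briefly when nothing was
// handled), which is exactly the busy-work the polling design costs.
const clients = [{ id: 1, service: "chat", inbox: ["hello"], outbox: [] }];
const services = { chat: { inbox: [], outbox: [{ clientId: 1, data: "welcome" }] } };
pollOnce(clients, services); // handles 2 messages
```

Every queue is checked on every pass whether or not it has anything in it, so the cost grows with the number of clients and services even when the server is idle.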

So now, the plan to proceed is as follows:

- Figure out a way to do a pre-forked server so that client polling can be done by more than one process

- Extend the built in classes a bit to simplify service creation and to make it a bit more intuitive.

As always, the source is available at https://github.com/kcuzner/python-websocket-server

Making a WebSocket server

For the past few weeks I have been experimenting a bit with HTML5 WebSockets. I don't normally focus only on software when building something, but this has been an interesting project and has allowed me to learn a lot more about the nitty gritty of sockets and such. I have created a github repository for it (it's my first time using git and I'm loving it) which is here: https://github.com/kcuzner/python-websocket-server

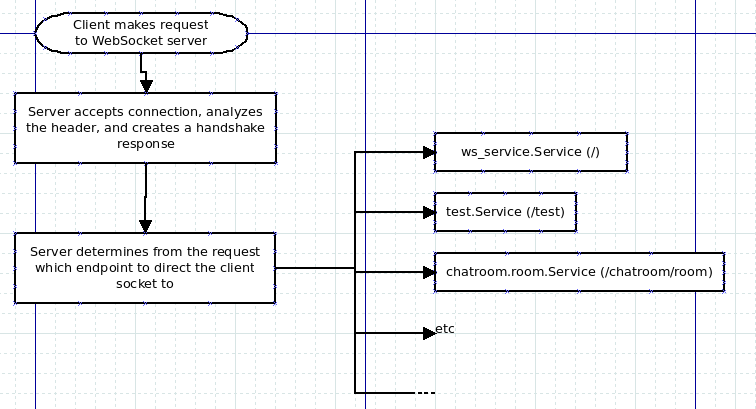

The server runs on a port dedicated to WebSocket-based services. It is written in Python and defines a few base classes for implementing a service. The basic structure is as follows:

Each service has its own thread and inherits from a base class consisting of a thread plus a queue for accepting new clients. Each client is a socket object returned by socket.accept(), wrapped in a class that allows communication with the socket via queues. The actual communication with the sockets is managed by a separate thread that handles all the encoding and decoding of WebSocket frames. Since adding a client doesn't produce much overhead, this structure could potentially be expanded very easily to handle many, many clients.
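For readers curious about what the encoding/decoding thread has to do, here is a minimal sketch of WebSocket framing as defined in RFC 6455, written in JavaScript with Uint8Array rather than the server's Python. It only handles single-frame messages with payloads of 125 bytes or fewer (no extended lengths, fragmentation, or ping/pong), and the function names are hypothetical. Clients must mask every frame they send, so decoding XORs the payload with the 4-byte masking key; server frames go out unmasked. The sample bytes are the masked "Hello" example from RFC 6455 section 5.7.

```javascript
// Decode a short, single-frame message sent by a client (clients must mask).
function decodeClientFrame(bytes) {
  const fin = (bytes[0] & 0x80) !== 0;  // final fragment flag
  const opcode = bytes[0] & 0x0f;       // 0x1 = text, 0x2 = binary, 0x8 = close, ...
  const masked = (bytes[1] & 0x80) !== 0;
  const length = bytes[1] & 0x7f;
  if (length > 125) throw new Error("extended payload lengths not handled in this sketch");
  if (!masked) throw new Error("client frames must be masked");
  if (bytes.length < 6 + length) throw new Error("incomplete frame");
  const mask = bytes.subarray(2, 6);
  const payload = new Uint8Array(length);
  for (let i = 0; i < length; i++) payload[i] = bytes[6 + i] ^ mask[i % 4];
  return { fin, opcode, payload };
}

// Encode a short text message from the server (server frames are not masked).
function encodeServerTextFrame(text) {
  const payload = new TextEncoder().encode(text);
  if (payload.length > 125) throw new Error("extended payload lengths not handled in this sketch");
  const frame = new Uint8Array(2 + payload.length);
  frame[0] = 0x81;           // FIN bit + opcode 0x1 (text)
  frame[1] = payload.length; // mask bit clear
  frame.set(payload, 2);
  return frame;
}

// Masked "Hello" from RFC 6455, section 5.7.
const hello = decodeClientFrame(
  Uint8Array.of(0x81, 0x85, 0x37, 0xfa, 0x21, 0x3d, 0x7f, 0x9f, 0x4d, 0x51, 0x58)
);
const helloText = new TextDecoder().decode(hello.payload); // "Hello"
```

Keeping this byte-level work in one dedicated thread, as the server does, means services only ever see decoded messages.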

A few things I plan on adding to the server eventually are:

- Using processes instead of threads for the services. Due to the global interpreter lock, if this is run using CPython (which is what most people use as far as I know, and what comes installed by default on many systems), only one thread can execute Python code at a time, so the threads effectively share a single CPU. Separate processes each get their own interpreter, so they don't have this limitation. The difficult part of all this is that it is hard to pass actual objects between processes, and I have to do some serious restructuring for the code to work without needing to pass objects (such as sockets) to services.

- Creating a better structure for web services (currently only two base classes are really available) including a generalized database binding that is thread safe so that a service could potentially split into many threads while not overwhelming the database connection.

Currently, the repository includes a demo chatroom service, and I should have its client-side application done and uploaded soon. It supports multiple chatrooms and multiple users, but there is no real authentication yet, and there are a few features I would like to add (such as being able to see who is in the chatroom).

Case LEDs Software

So, I have just cleaned up, documented a little better, and zipped up the firmware and host side driver for the case LEDs. The file does not contain the hardware schematic because it has some parts in it that I created myself and I don't feel like moving all the symbols around from my gEDA directory and getting all the paths to work correctly.

The host side driver only works on linux at the moment due to the usage of /proc/stat to get CPU usage, but eventually I plan on upgrading it to use SIGAR or something like that to support more platforms once I get a good environment for developing on Windows going. If you can't wait for me to do it, you could always do it yourself as well.
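The CPU-usage part is simple to sketch: /proc/stat exposes cumulative time counters (in clock ticks), so usage is the non-idle share of the time that elapsed between two samples of the aggregate "cpu" line. This JavaScript version is an illustration, not the driver's actual code; counting iowait as idle and summing only up to the steal column are assumptions about how you might want to count.

```javascript
// CPU usage from two samples of the aggregate "cpu" line in /proc/stat.
// Field order (see proc(5)): user nice system idle iowait irq softirq steal guest guest_nice
function parseCpuLine(line) {
  const fields = line.trim().split(/\s+/);
  if (fields[0] !== "cpu") throw new Error("expected the aggregate 'cpu' line");
  // Only the first 8 counters are summed: guest time is already included in user/nice.
  const counters = fields.slice(1, 9).map(Number);
  const idle = counters[3] + (counters[4] || 0); // idle + iowait
  const total = counters.reduce((acc, v) => acc + v, 0);
  return { idle, total };
}

// Fraction of time (0..1) the CPUs were busy between two samples.
function cpuUsage(earlierLine, laterLine) {
  const a = parseCpuLine(earlierLine);
  const b = parseCpuLine(laterLine);
  const totalDelta = b.total - a.total;
  if (totalDelta <= 0) return 0;
  return 1 - (b.idle - a.idle) / totalDelta;
}

// In Node, a sample could be read with:
//   require("fs").readFileSync("/proc/stat", "utf8").split("\n")[0]
const usage = cpuUsage("cpu  100 0 100 800 0 0 0 0", "cpu  150 0 150 900 0 0 0 0"); // 0.5
```

The per-core lines (cpu0, cpu1, ...) have the same layout, so the same arithmetic could drive one LED per core if desired.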

Anyway, the file is here: LED CPU Monitor Software

Here is the original post detailing the hardware along with a video tour/tutorial/demonstration: The Case LEDs 2.0 Completed

The Case LED v. 2.0: Completed

After much pain and work...(ok, I had a great time; let's be honest now)...I have finished the case LEDs!

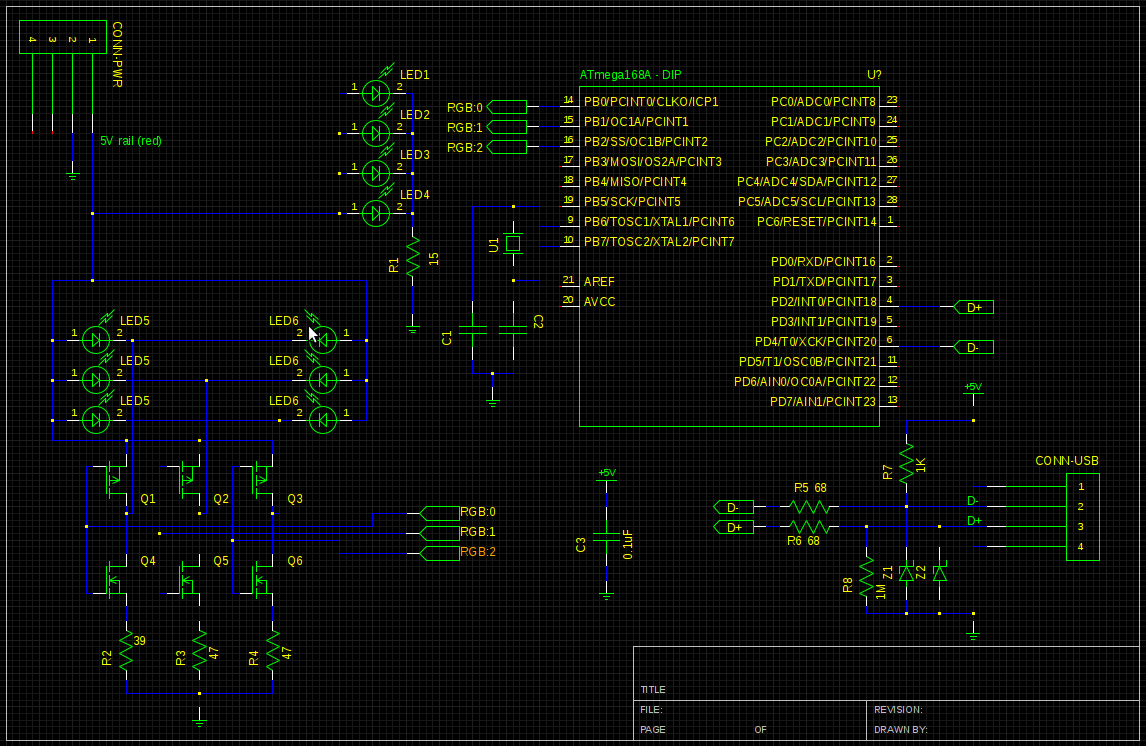

Pursuant to the V-USB licence, I am releasing my hardware schematics and the software (which can be found here). However, it isn't because of the licence that I feel like releasing them...it is because it was quite fun to build and I would recommend it to anyone with a lot of time on their hands. So, to start off let us list the parts:

- 1 ATMega48A (Digi-key: ATMEGA48A-PU-ND)

- 1 28 pin socket (Digi-key: 3M5480-ND)

- 2 3.6V Zener diodes (Digi-key: 568-5907-1-ND)

- 2 47Ω resistors (Digi-key: 47QBK-ND)

- 1 39Ω resistor (Digi-key: 39QTR-ND)

- 1 15Ω resistor (Digi-key: 15H-ND)

- 3 100V 300mA TO-92 P-Channel MOSFETs (Digi-key: ZVP2110A-ND)

- 3 2N7000 TO-92 N-Channel MOSFETs (Digi-key: 2N7000TACT-ND)

- 1 10 Position 2x5, 0.1" pitch connector housing (Digi-key: WM2522-ND)

- 10 Female terminals for said housing (Digi-key: WM2510CT-ND)

- 1 4-pin male header, 0.1" pitch, for the diskette connector from your power supply (you can find these on Digi-key pretty easily as well...there are a lot)

- 2 RGB LEDs (Digi-key: CLVBA-FKA-CAEDH8BBB7A363CT-ND, but you can use whatever you find)

- 4 White LEDs like in my last case mod

- 1 Prototyping board, 24x17 holes

The schematic is as follows:

The parts designations are as follows:

- R1: 15Ω

- R2: 39Ω for the Red channel

- R3: 47Ω for the Green channel

- R4: 47Ω for the Blue channel

- LED1-4: White LEDs of your choosing. Make sure to re-calculate the correct value for R1, taking into account that there are 4 LEDs.

- LED5-6: The RGB LEDs. The resistor values here are based on the part I listed above, so if you decide to change it, re-calculate these values.

- Q1-Q3: The P-Channel MOSFETs

- Q4-Q6: The N-Channel MOSFETs

- Z1-Z2: The zener diodes

- U1: 16MHz crystal

- C1-C2: Capacitors to match the crystal. In my circuit, I think they were around 33pF.

- CONN-PWR: The 4-pin connector for the diskette

- CONN-USB: The USB connector. You will have to figure out the wiring for this for your own computer. I used this site for mine. Don't forget to twist the DATA+ and DATA- wires together if you aren't using a real USB cable (I wasn't).

- C3: Very important decoupling capacitor. Place this close to the microcontroller.
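For re-calculating R1 through R4, the usual approach for a current-limiting resistor is Ohm's law applied to the voltage left over after the LED's forward drop: R = (V_supply - V_f) / I. If several LEDs share one resistor in parallel (my assumption for LED1-4 on R1, since the wiring isn't spelled out here), the resistor carries the sum of their currents. The Python sketch below uses made-up example numbers, not the values from my build; take V_f and I from your own LED's datasheet.

```python
def series_resistor(v_supply, v_forward, i_led, n_parallel=1):
    """Current-limiting resistance in ohms for n identical LEDs in parallel on one resistor."""
    if v_supply <= v_forward:
        raise ValueError("supply voltage must exceed the LED forward voltage")
    if i_led <= 0 or n_parallel < 1:
        raise ValueError("need a positive current and at least one LED")
    return (v_supply - v_forward) / (i_led * n_parallel)


def resistor_power(v_supply, v_forward, i_led, n_parallel=1):
    """Power in watts dissipated in that resistor."""
    return (v_supply - v_forward) * i_led * n_parallel


# Hypothetical example: 5V rail, white LEDs with Vf = 3.2V at 20mA, four sharing R1
r = series_resistor(5.0, 3.2, 0.020, n_parallel=4)
p = resistor_power(5.0, 3.2, 0.020, n_parallel=4)
print(f"R1 = {r:.1f} ohms, dissipating {p * 1000:.0f} mW")
```

Pick the next standard value up and check that the resistor's wattage rating covers the dissipation. Keep in mind that LEDs sharing one resistor in parallel won't split the current perfectly evenly, because their forward voltages vary slightly from part to part; one resistor per LED avoids that.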

As I was building this I did run into a few issues which are easy to solve, but took me some time:

- If the USB doesn't connect, check the connections, check to make sure the pullups are in the right spot, and check to make sure the DECOUPLING CAPACITOR is there. I got stuck on the decoupling capacitor part, added it, and voila! It connected.

- If the LEDs don't light up, check the connections, then make sure you have it connected to the right power rails. My schematic is a low-side switch since the LEDs I got were common anode. I connected both ends to negative when I first assembled the board, and it caused me quite a headache before I realized what I had done.

- Double and triple check all the wiring when soldering. It is a pain to re-route connections (trust me...I know). Measure twice, cut once.

Although I already have a link above, the software can be found here: Case LEDs Software

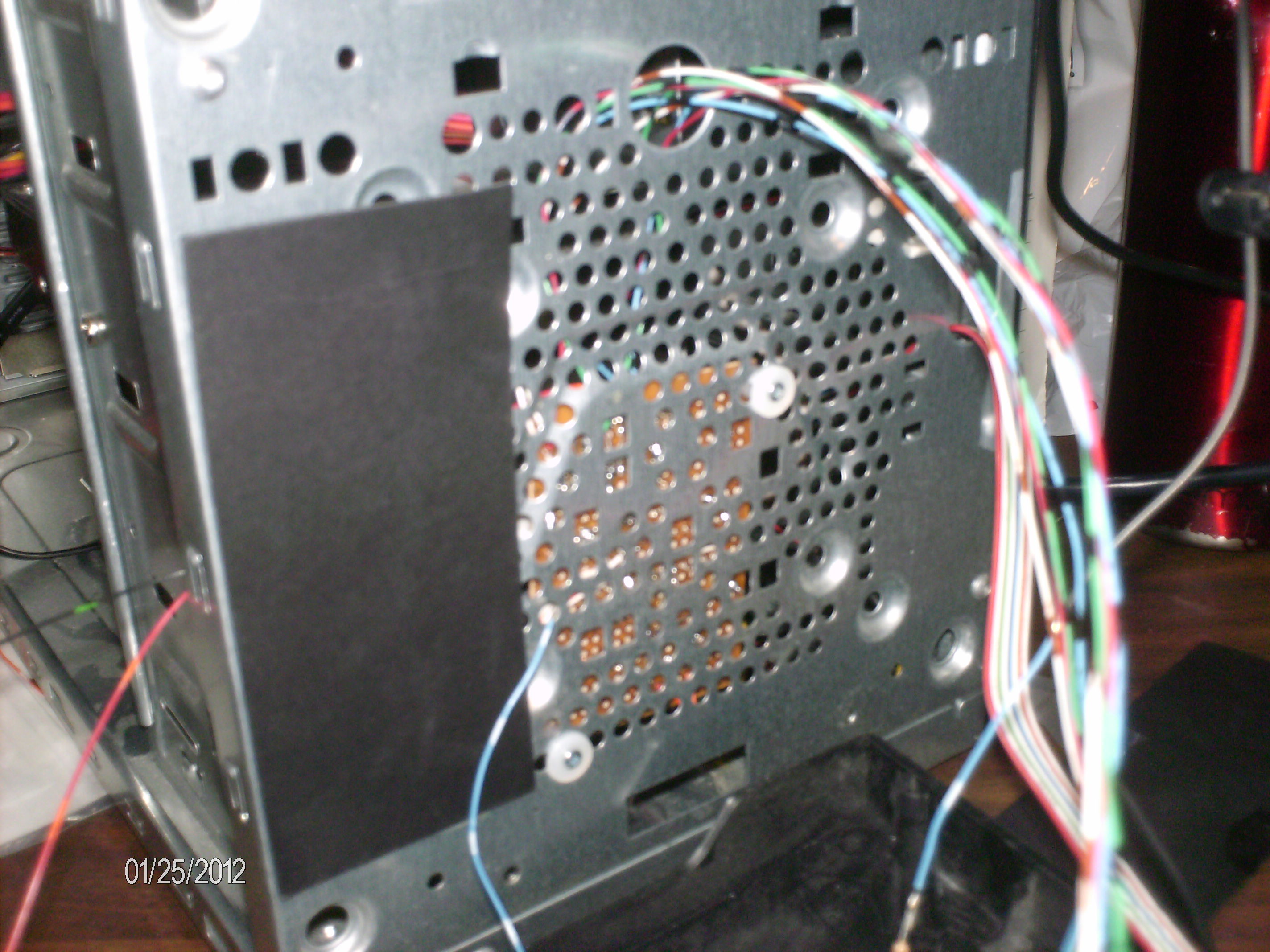

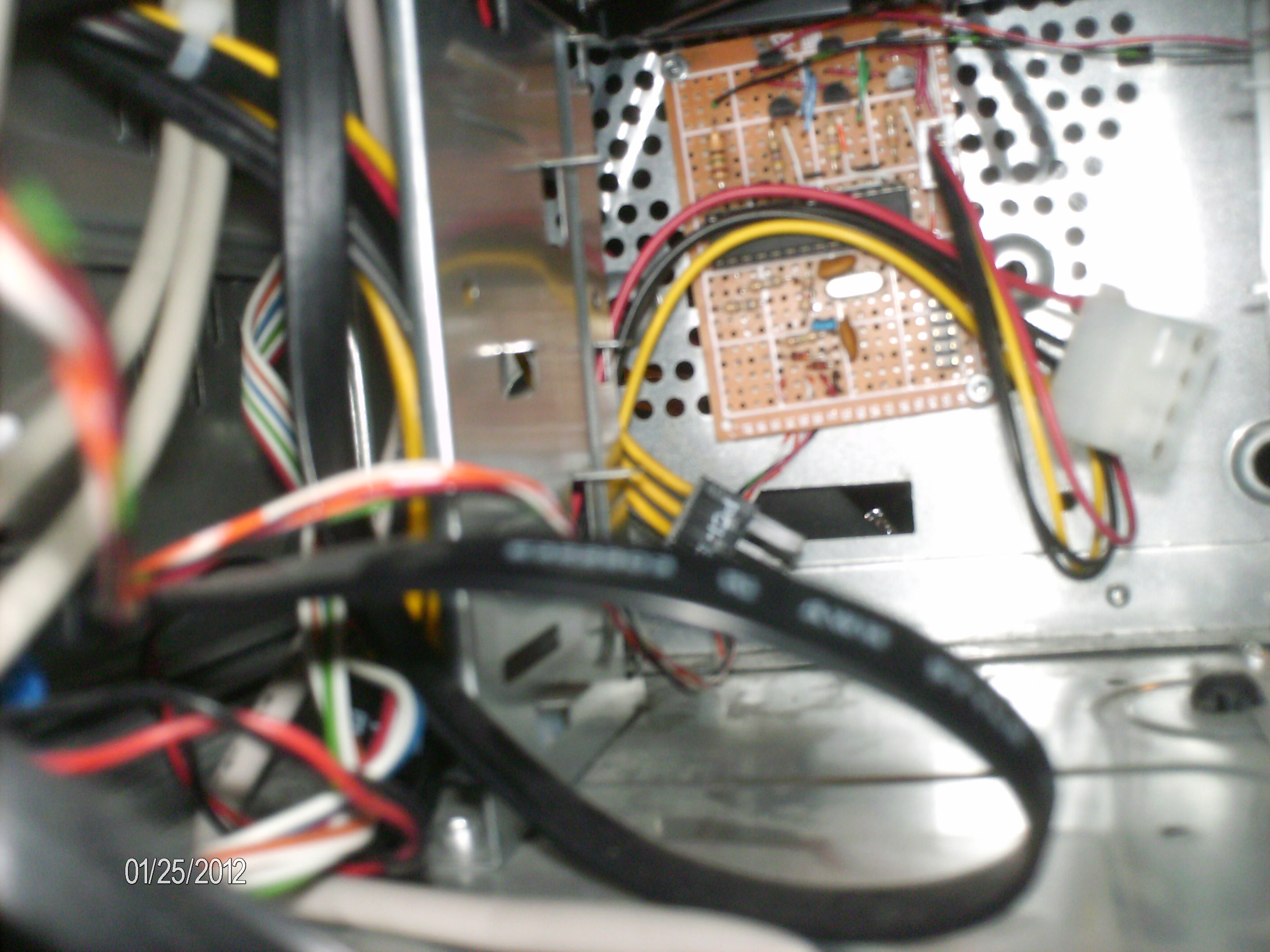

So, here are pictures of the finished product:

Yay for driver signing!

As I was working to get my LED case lights ready to install (tutorial and videos coming soon!), I was trying to make the device usable on both Windows and Linux, since I run both on my computer. So, I followed some ...great... online instructions which directed me to install something called ReadyDriver Plus. Basically, it disables driver signature enforcement so that you can install unsigned drivers such as libusb.

Well, the first time I restarted, it did something weird. My computer first loads GRUB, and from GRUB it loads the Windows bootloader. I think that was the main problem with getting ReadyDriver Plus to work, since it is just a program that runs at boot and disables driver signing through the Windows startup menu. Because it didn't work properly, it had the added effect of disabling ALL of my device drivers, including my wired keyboard and the Bluetooth dongle for the mouse. Great.

So, I manually disabled driver signing (press F8 after selecting Windows 7 in the Windows bootloader and scroll down to "Disable Driver Signature Enforcement"), and it loaded again just fine. Then I said to myself, "Oh, I can just do it that way when I want to use the lights in my computer on Windows," and decided to uninstall ReadyDriver Plus. Bad idea. Now it doesn't show the Windows bootloader screen, and I discovered that the keyboard driver isn't working again. So I can't tell it to turn off driver signing, and I can't use Windows on the computer at all. Great.

At this point the easiest thing to do seems to be reinstalling Windows. Hopefully it doesn't mind, since it is the same computer (read: motherboard), given all the crazy DRM they put in Windows 7.

In short, I don't think Windows will be supported for my case lights just yet.

EDIT: Yay for System Restore! Apparently when I installed Assassin's Creed it created a nice restore point. Now everything works great again. However, I won't be messing with the drivers again for a while. I want to make sure I have plenty of time on my hands before I try that again, just in case System Restore isn't as awesome next time.

Case LEDs version 2.0

So, as usual, after I completed my LED case mod I asked myself how I could make it even cooler. Thus was born the idea for Case LEDs v. 2.0.

The Idea: Wire up some LEDs so they are controlled by the computer to vary their intensity or something based on the CPU usage.

The Implementation: Using RGB LEDs, some small MOSFETs, and a microcontroller, make a USB-controlled light generator that takes as input a number representing CPU usage.

In the 3 weeks since I put the white LEDs in my case, I have been working on this thing in my spare time (mostly weekends; homework has been swamping me during the week). This past weekend I finally got it to connect over USB using the V-USB library, so I have made a lot of progress. At the moment it is perfectly capable of displaying CPU usage as a color (it is really cool to watch), but I still want to add a few features before I release the source code. I also need to test it to make sure it doesn't crash after 2 days or have some horrible memory leak in the host software.

Since I am running Linux, the host software is Linux-specific for now, but later I will add support for Windows, since I plan on installing Windows on my computer for gaming at some point. There are two parts to the software: the device firmware and the host software. To minimize USB traffic, the firmware does the conversion from CPU usage to RGB and also the visual effects. All the host software has to do is read the CPU usage and tell the device about it.
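Doing the conversion on the device means the host only has to send a single number per update. The colors the firmware actually uses aren't described here, so the mapping below is a hypothetical one (blue when idle, green at half load, red at full load), sketched in Python for readability. Each channel is an 8-bit value that firmware could use as a PWM duty cycle; on a microcontroller the same idea could be done with a few lines of integer math.

```python
def usage_to_rgb(percent):
    """Map CPU usage (0-100) to an (r, g, b) tuple of 8-bit duty values.

    Hypothetical scheme: blue at idle, fading to green at 50%, then to red at 100%.
    Out-of-range input is clamped.
    """
    p = max(0.0, min(100.0, float(percent)))
    if p <= 50.0:
        t = p / 50.0            # 0..1 across the lower half: blue -> green
        return (0, round(255 * t), round(255 * (1 - t)))
    t = (p - 50.0) / 50.0       # 0..1 across the upper half: green -> red
    return (round(255 * t), round(255 * (1 - t)), 0)
```

Keeping this on the device also means the visual effects (fades, smoothing) can run at the PWM rate without waiting on the host.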

The hardware isn't incredibly complex. It uses an ATmega168A microcontroller (I am aiming for a smaller 14-18 pin microcontroller eventually; this one is just too big and would be a waste) to control some MOSFETs that turn the LEDs on and off. The LEDs I got were some $0.55 PLCC-4 parts from Digikey, which I soldered wires to and secured with hot glue. My first try looks awful, with hot glue everywhere; the second one looks amazing, since I figured out that hot glue melts before heat shrink shrinks, so I could put the glue inside the heat shrink.

There are 2 MOSFETs per LED channel in a complementary logic configuration. Complementary logic normally inverts (put in a 1, get out a 0 and vice versa), but since the LEDs are common anode, the MOSFETs drive the cathode wire: a 1 on the input pulls the cathode low and turns that channel on, so from the microcontroller's point of view there is no inverting effect.

The whole system runs at 3.3V because I didn't have any 3.6V zener diodes to keep the voltage levels on the USB pins in check so that it could talk to the computer. Apparently the voltage levels for USB are very strict, and my first few tries didn't communicate with the computer because of the voltage levels coming out of the USB pins. After I changed the supply to 3.3V it worked perfectly on the first try.

Eventually this is going to connect to one of the internal USB connectors on the motherboard, with power supplied by the same 4-pin connector I used for my white LEDs. I am debating running it entirely off USB power, but I am still not sure, since that would limit any future expansion to 500mA of current draw, and the planned configuration will already draw between 250 and 300mA.
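To make the "no inverting effect" point concrete, here is a small logic model in Python. It assumes each P/N pair is wired as a standard inverter driving one cathode of the common-anode LED; it is an idealized truth table, not a circuit simulation, and the function names are just for illustration.

```python
def inverter_output(gate_high):
    """Complementary P/N MOSFET pair: a high gate turns the N-channel on and pulls the output low."""
    return not gate_high


def channel_lit(pin_high, anode_powered=True):
    """Common-anode LED channel: lights when the anode is powered and its cathode is pulled low."""
    cathode_high = inverter_output(pin_high)
    return anode_powered and not cathode_high


for pin in (False, True):
    print(f"pin={int(pin)} -> cathode={'high' if inverter_output(pin) else 'low'}, "
          f"LED {'on' if channel_lit(pin) else 'off'}")
```

The inversion happens at the cathode, but because the LED only lights when its cathode is low, a 1 on the microcontroller pin ends up meaning "this color on".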

Anyway...I plan on making a tutorial video of sorts along with pictures and schematics, since aside from the programming this was really an easy project. I just need a week or two to get all the parts soldered together and the program finalized, and then I'll know exactly how much this thing costs to build.
